
Case Study: Supporting key operational transformations at Alaska Airlines

Authors: Ryan Papineau, Automated Test Engineer, Alaska Airlines; Jeff Crose, Software Development Engineer in Test, Alaska Airlines


Ryan Papineau, Automated Test Engineer, and Jeff Crose, Software Development Engineer in Test, both of Alaska Airlines, share how the airline has used accurate test data and service virtualization to support key operational transformations.

Although the theme of this article is testing and virtualization within the airline space, perhaps it will be useful to start by explaining a little about Alaska Airlines.

ALASKA AIRLINES

Contrary to popular belief, we cannot see polar bears from our office windows: our headquarters are in Seattle, Washington, but the airline's name does reflect its origins and its pioneering spirit. With the recent acquisition of Virgin America, Alaska has become the fifth-largest US airline, transporting over 44 million guests a year. We maintain a young operational fleet of 166 Boeing 737 aircraft, 71 Airbus A320 family aircraft, 35 Bombardier Q400 aircraft and 26 Embraer 175 aircraft. The fleet serves the group's regional and mainline operations and connects about 118 cities in the United States, Mexico, Canada and Costa Rica. The airline has more than 23,000 employees.

A TECHNOLOGY JOURNEY

Readers will already be aware of the digital transformation that our industry is facing, and most have probably already embarked on that journey. As an eighty-seven-year-old airline, Alaska Airlines predates the computer age. Many of our original processes were analog, using paper and pencil. As we evolved, many of those processes were digitized, but they remained largely one-to-one equivalents of the analog processes they replaced. To remain competitive as we move forward, we are focusing increasingly on mobile and Cloud development, as well as on business intelligence (BI), to provide more customer-led solutions and a better experience for our customers.

Figure 1

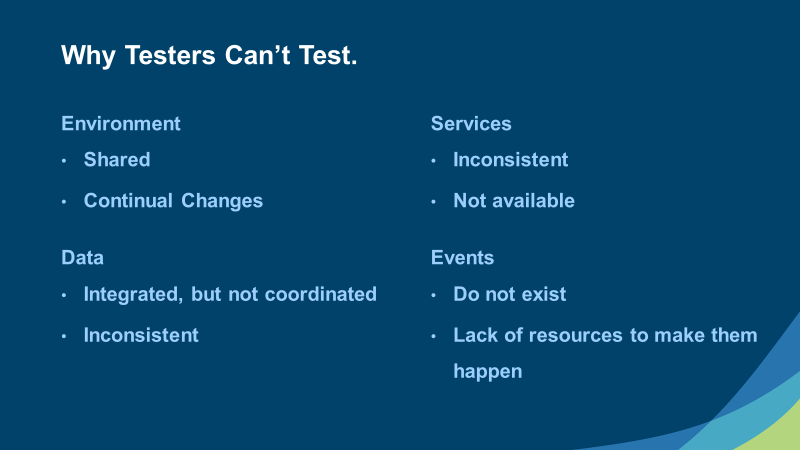

As an airline, Alaska interacts with many systems to offer our customers a quality product. Some have been developed internally and some have been bought from vendors with whom readers will be familiar (SITA – Aircomm; Smart4Aviation – SmartLoad, SmartComm, SmartSuite; eMRO – Trax; Jeppesen – Jeppesen Crew Tracking; Champ – CargoSpot; Sabre – PSS). We have aircraft, crew, cargo, baggage and fuel applications. However, when we brought all of these independent applications (figure 1) together to support our new Weight & Balance system, we began to face many challenges, which we collectively call 'Why testers can't test' (figure 2).

Figure 2

This complex system is really a collection of separate applications, and the environment itself presents many new challenges. Most prominently, it is shared and subject to continual change. Our data needs to be refreshed periodically or it risks becoming inconsistent. There are also dependent services that interact differently with the environment and might not have the right data, and some of the events the system depends on may never occur in the test environment at all. We refer to this environment problem as 'integrated, but not coordinated'.

OVERCOMING THE CHALLENGES AND PROBLEMS

These challenges were hard to overcome in the test environment. Sharing how we solved them is the core theme of this article.

Environmental challenges and solutions

Our first challenge was the environment itself or, more specifically, the fact that it was shared. Other teams were producing and deploying new code, so every failure cost us a lot of troubleshooting time: was it our code, or another team's code? Although we were working together, it took time and several cycles to find the root cause of these failures. Beyond the frequent changes, the failures also exposed missing dependencies: by the time we traced a problem to another, interdependent service, we would discover that those applications in turn had missing dependencies (dependency hell, anyone?). Though it seemed insurmountable, eventually all of them were resolved.

The first part of our solution was to isolate our project from the other projects by creating our own environment. Using virtual machines and our own isolated deploy type, we kept that environment separate so that there would be no cross-pollination between it and the other environments; our deploy type became known as certification (CERT). Another very important piece of our solution was people: one of our leadership principles at the time was to develop an understanding of 'the people, the process, the technology'. This involved bringing in a release manager to keep all the dependent code bases in sync and help maintain the complex catalog of all these applications. Because of licensing costs or other constraints, some of these applications simply did not exist in, or could not be deployed to, our environment; we return to these below when discussing service virtualization. Identifying all the applications and their dependencies for our weight and balance system allowed us to create thorough architectural diagrams and an application/service catalog for our CERT environment. Once we had finally ironed out the environmental challenges, the next question was: what were we going to do about data?
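A catalog like the one described above can also be checked mechanically. The following is a minimal Python sketch, not Alaska's actual tooling: it assumes a hypothetical in-memory catalog in which each application lists its direct dependencies and carries a `deployable` flag for applications that licensing or other constraints keep out of CERT. It reports dependencies missing from the catalog entirely, and cataloged but undeployable dependencies that are candidates for virtualization.

```python
from dataclasses import dataclass, field


@dataclass
class Application:
    """Hypothetical catalog entry for one application in the CERT environment."""
    name: str
    deployable: bool  # False when licensing or other constraints keep it out of CERT
    depends_on: list[str] = field(default_factory=list)


def audit_catalog(catalog: dict[str, Application]) -> tuple[set[str], set[str]]:
    """Return (missing, to_virtualize) by checking each application's direct dependencies."""
    missing: set[str] = set()        # referenced, but not in the catalog at all
    to_virtualize: set[str] = set()  # in the catalog, but cannot be deployed to CERT
    for app in catalog.values():
        for dep in app.depends_on:
            if dep not in catalog:
                missing.add(dep)
            elif not catalog[dep].deployable:
                to_virtualize.add(dep)
    return missing, to_virtualize


# Illustrative catalog; the names and flags are made up for this example.
catalog = {
    "weight-balance": Application("weight-balance", True, ["cargo", "aircomm", "fuel"]),
    "cargo": Application("cargo", True),
    "aircomm": Application("aircomm", False),
}
missing, to_virtualize = audit_catalog(catalog)
print(missing, to_virtualize)  # {'fuel'} {'aircomm'}
```

Only direct dependencies are checked here; walking the graph transitively would surface the "dependencies of dependencies" problem described earlier.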

Data challenges and solutions

As highlighted above, there are a lot of moving parts to an airline. One of the challenges we faced was that data is constantly moving but is only useful until the flight lands; after that, it becomes stale and no longer useful for testing. The availability of data is also a challenge. One day there might be a few cancellations, on another certain passenger loads on certain routes, and on another live animals and/or dangerous goods in the cargo hold. Testing these scenarios on a regular basis was difficult because you might not find all of them on any given day.

On top of that, readers carrying technical debt or a lot of legacy systems will know that such systems have been developed over the years by many different developers and contractors with many different opinions, and that there may be design problems along the way. Readers will also be aware of GDPR; in the United States, California is implementing its own data privacy legislation, the California Consumer Privacy Act, which will take effect in 2020. The protection of personal data is one of Alaska Airlines' key concerns.

Historically, we used production data for testing. And, of course, when you try to test more regularly, you have to consider the time lost to continual database refreshes. At Alaska Airlines, as at any airline, data came from multiple relational database platforms, flat-file sources and so on. Refreshing took a lot of time and money every day. We also had to synchronize the data across multiple platforms to keep the baggage system, the passenger system and the flight operations management system in line with one another.

Alaska Airlines adopted Test Data Management (TDM), the process of bringing data under control, through a combination of internally developed SQL scripts and a commercial off-the-shelf tool, IBM Optim Testing and Management Suite, and Testing and Privacy. We eliminated the protracted time lost to database refreshes by creating right-sized data subsets: instead of loading a year's worth of data to live through one day, we extract just one day's worth. This single change accounted for a large part of our time savings, taking refreshes from hours or days down to just minutes. We could then obfuscate that subset, masking personally identifiable information (PII) such as flight crew and passenger names and their birth dates.
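The subset-then-mask idea can be sketched in a few lines of Python. This is an illustration only, not IBM Optim's API: the row layout (`flight_date`, `name`, `birth_date`), the salted SHA-256 masking and the year-only birth dates are all assumptions. Masking is deterministic so that the same passenger masks to the same token in every database, which keeps synchronized data consistent.

```python
import hashlib
from datetime import date


def subset_one_day(rows: list[dict], day: date) -> list[dict]:
    """Keep only the rows for a single operating day (a right-sized subset)."""
    return [row for row in rows if row["flight_date"] == day]


def mask_name(value: str, salt: str) -> str:
    """Deterministically mask a name: the same input always yields the same token,
    so a passenger masked in one database still matches in another."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return "PAX" + digest[:8].upper()


def mask_row(row: dict, salt: str) -> dict:
    """Return a masked copy of a passenger row; the original row is left untouched."""
    masked = dict(row)
    masked["name"] = mask_name(row["name"], salt)
    masked["birth_date"] = date(row["birth_date"].year, 1, 1)  # keep the year only
    return masked


# Illustrative rows; not real passenger data.
rows = [
    {"flight_date": date(2019, 5, 1), "name": "Jane Doe", "birth_date": date(1980, 7, 4)},
    {"flight_date": date(2019, 5, 2), "name": "John Roe", "birth_date": date(1975, 3, 9)},
]
subset = [mask_row(r, salt="test-env") for r in subset_one_day(rows, date(2019, 5, 1))]
```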

The key to our solution was applying a global date-aging solution across all the orchestrated databases. This allowed us to create a nightly process that cleaned up the previous day's data and dynamically aged it forward one day, so that it was fresh again the next day; now we could live through the life-cycle of the flights in our schedule. At this point in the process the data becomes database-platform agnostic, so it is flexible and can be used for multiple purposes, including the training environment described in the Overall Benefits section.
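One way to picture global date aging is a single offset, the difference between the day the baseline subset was captured and the day it should represent, applied to every date column in every database. The Python sketch below assumes a hypothetical in-memory representation (`{db: {table: rows}}` plus a map of which columns hold dates); it is not the product's mechanism. Using one offset everywhere is what keeps dates that relate rows across databases aligned.

```python
from datetime import date, datetime, timedelta


def age_rows(rows: list[dict], date_columns: list[str], offset: timedelta) -> list[dict]:
    """Shift every listed date/datetime column in each row by the same offset."""
    aged = []
    for row in rows:
        new_row = dict(row)
        for col in date_columns:
            if new_row.get(col) is not None:
                new_row[col] = new_row[col] + offset
        aged.append(new_row)
    return aged


def global_date_age(databases: dict, date_columns: dict,
                    base_day: date, target_day: date) -> dict:
    """Age all tables in all databases by one global offset (target_day - base_day).

    databases:    {db_name: {table_name: [row, ...]}}
    date_columns: {(db_name, table_name): [column, ...]}
    """
    offset = target_day - base_day  # the same offset everywhere keeps cross-database dates aligned
    return {
        db: {
            table: age_rows(rows, date_columns.get((db, table), []), offset)
            for table, rows in tables.items()
        }
        for db, tables in databases.items()
    }
```

A nightly job in this style would call `global_date_age(baseline, columns, capture_day, date.today())`.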

There were also some database design issues. Cross-database synchronization was a small challenge, since primary keys and database constraints did not cross from one database to another. However, the products we were using, both the commercial tool and our internally developed scripts, were able to derive data relationships across databases. This allowed us to extract a complete, referentially intact subset from multiple database systems. This extract contained the 'sunny day' scenarios. Once we understood the data relationships, we could generate synthetic data for irregular operations, edge cases and other custom test scenarios and add it to our overall test data management solution, providing reliable, repeatable end-to-end data.
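Extracting a referentially intact subset when no foreign keys span databases comes down to walking relationships that have been derived from the data. The Python sketch below is a simplified illustration under stated assumptions: tables are lists of row dicts, the derived relationships are supplied by hand as hypothetical `(parent_table, parent_column, child_table, child_column)` tuples, and only parent-to-child links are followed. Real tools would also pull in referenced parent and lookup rows.

```python
from collections import deque

# (parent_table, parent_column, child_table, child_column): a relationship derived from
# the data, because no foreign key constraint spans the two databases.
Relationship = tuple[str, str, str, str]


def extract_subset(tables: dict[str, list[dict]], relationships: list[Relationship],
                   start_table: str, predicate) -> dict[str, list[dict]]:
    """Select driving rows from start_table, then walk the derived parent->child
    relationships breadth-first so every related row is pulled into the subset."""
    selected: dict[str, list[dict]] = {name: [] for name in tables}
    seen: dict[str, set[int]] = {name: set() for name in tables}
    queue: deque = deque()

    for i, row in enumerate(tables[start_table]):
        if predicate(row):
            seen[start_table].add(i)
            selected[start_table].append(row)
            queue.append((start_table, row))

    while queue:
        table, row = queue.popleft()
        for parent_table, parent_col, child_table, child_col in relationships:
            if parent_table != table:
                continue
            for i, child in enumerate(tables[child_table]):
                if child[child_col] == row[parent_col] and i not in seen[child_table]:
                    seen[child_table].add(i)
                    selected[child_table].append(child)
                    queue.append((child_table, child))
    return selected
```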

Service challenges and solutions

Alaska Airlines has many partners who maintain their own internal test systems for us to connect to, but that data is under the vendor's control. Even when we have access, it takes a lot of time to submit requests through the vendor to get our desired data loaded. It also takes a lot of time to set up and configure data across all the other applications to support the scenario. This data creation cycle might take one, two or more days before the feedback loop closes and the data is in the state you need. And after you've spent that time, the data might have aged out, so you must start the cycle all over again.

Another source of complexity in our environment is the variety of data protocols, including multiple database platforms, different implementations, job apps… there's a lot of complexity across these different services. Some services are also expensive to use, perhaps with a 'hit count' cost, which drives up the cost of testing and integrating applications.

We overcame these service problems by using service virtualization. Service virtualization lets you build virtual stand-ins for application dependencies that abstract away the real service, so you don't have to deal with the real service and all its downstream dependencies. This removes those constraints from the environment architecture. Alaska Airlines selected Parasoft Virtualize as its service virtualization tool because of the speed with which systems can be virtualized, its rich protocol support and its ease of use.

For Alaska Airlines, this meant we could virtualize some of these difficult-to-coordinate services. The biggest one was passenger counts, which are stored in our Sabre partition. The raw passenger data is accessed via two internally developed services: one gets the raw data from Sabre, and another aggregates the values from the first service. What does the life cycle of a passenger look like? We know that passengers shop, book, check in and board. We were running a thousand flights, and every flight has a hundred or more passengers, which is a lot of events to automate. Automating it would mean simulating this flow of state changes across all passengers on all flights, essentially reproducing production-level traffic loads in the test environment. But we didn't have production-level server resources in our non-production environment, nor did we have the raw data from Sabre. We did, however, have access to the production aggregated data. Using service virtualization and data analysis, we created time-based ratios to simulate what the passenger counts service would return as passengers booked, checked in and finally boarded. Because we solved this by pairing test data with service virtualization, users could update the values in the database and refresh the UI, closing the feedback loop for data requests. The final solution virtualized a synchronous SOAP XML service over HTTP, backed by MS-SQL, simulating passenger lists from Sabre.
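The time-based ratio idea can be illustrated with piecewise-linear curves that say what fraction of booked passengers have checked in or boarded at a given number of minutes before departure. The Python sketch below covers only that calculation, not the SOAP/MS-SQL service around it, and the curve points are invented placeholders rather than ratios derived from Alaska's production data.

```python
from dataclasses import dataclass

# Hypothetical ratio curves: (minutes before departure, fraction of booked passengers),
# ordered from furthest-out to closest-in. Real curves would come from analysing
# production aggregated data.
CHECKIN_CURVE = [(1440, 0.0), (180, 0.4), (60, 0.85), (40, 1.0)]
BOARDING_CURVE = [(40, 0.0), (10, 0.9), (0, 1.0)]


def ratio_at(curve: list[tuple[int, float]], minutes_before: float) -> float:
    """Piecewise-linear interpolation of a curve at a given time before departure."""
    if minutes_before >= curve[0][0]:
        return curve[0][1]
    for (m1, r1), (m2, r2) in zip(curve, curve[1:]):
        if m2 <= minutes_before <= m1:
            return r1 + (r2 - r1) * (m1 - minutes_before) / (m1 - m2)
    return curve[-1][1]  # past the last point: the process is complete


@dataclass
class PassengerCounts:
    booked: int
    checked_in: int
    boarded: int


def simulated_counts(booked: int, minutes_before_departure: float) -> PassengerCounts:
    """What a virtual passenger-counts service might return for one flight right now."""
    checked_in = round(booked * ratio_at(CHECKIN_CURVE, minutes_before_departure))
    boarded = round(booked * ratio_at(BOARDING_CURVE, minutes_before_departure))
    return PassengerCounts(booked, checked_in, min(boarded, checked_in))
```

Keeping the booked count and curves in a database, as the article describes, is what would let testers change the inputs and see new counts on the next UI refresh.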

Although the passenger counts simulation was involved, there were simpler solutions as well. The weight and balance system required load close-out acknowledgments to complete the weight and balance of an aircraft, but the test environment lacked an isolated Aircomm instance, actual pilots, and actual aircraft with ACARS units and satellite communication (SATCOM) to perform these tasks. Using service virtualization, we simulated the pilot in the cockpit handling the load close-outs. We created logic that accepts a load close-out message and responds with an acknowledgment message carrying the correct acknowledgment ID, maintaining referential integrity across systems. This simple flow allowed users to complete their weight and balance test scenarios. The final solution virtualized XML and fixed-width messages over Message Queue (MQ).
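The accept-and-acknowledge logic can be sketched in Python independently of MQ. Everything about the message format here is an assumption made for illustration: the field positions, the `LCO` and `ACK` type codes and the field widths are hypothetical and do not reflect the real Aircomm/ACARS layouts. The point is only that the reply echoes the acknowledgment ID so downstream systems can correlate it.

```python
from typing import Optional

# Hypothetical fixed-width layout for illustration only (NOT the real Aircomm/ACARS format):
# columns 0-3 message type, 4-11 flight number, 12-19 acknowledgment ID.
LAYOUT = {"msg_type": (0, 4), "flight": (4, 12), "ack_id": (12, 20)}


def parse_fixed_width(message: str) -> dict[str, str]:
    """Slice a fixed-width message into trimmed fields using LAYOUT."""
    return {name: message[start:end].strip() for name, (start, end) in LAYOUT.items()}


def respond_to_load_closeout(message: str) -> Optional[str]:
    """Play the pilot: acknowledge a load close-out, echoing its acknowledgment ID
    so the reply can be correlated with the original message downstream."""
    fields = parse_fixed_width(message)
    if fields["msg_type"] != "LCO":
        return None  # not a load close-out; a real stub might route or log it instead
    return "ACK " + fields["flight"].ljust(8) + fields["ack_id"].ljust(8)


request = "LCO " + "AS123".ljust(8) + "00042".ljust(8)
print(repr(respond_to_load_closeout(request)))  # 'ACK AS123   00042   '
```

In a virtual service, this function would sit behind a listener that reads from the request queue and writes the reply to the response queue.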

Event challenges and solutions

One of the last major challenges we faced was events. Just as in production, an aircraft sitting on the ground is not very useful, so we needed a way to simulate the life-cycle of a day of aircraft operations at Alaska Airlines. From a passenger perspective, we needed to book passengers, check them in and board them, which we had already handled using service virtualization. We also needed aircraft events to simulate aircraft movement: when the aircraft left the gate, took off, landed and arrived at the gate, known as the out, off, on, in (OOOI) events. To add to the complexity, the weight and balance system had timeline rules for business processes that we needed to accommodate. Beyond these aircraft events, other manual processes required input from a subject matter expert. Given our resource constraints, we had to find a way to automate the processes that people were doing manually.

To solve our event problems, we analyzed one flight for a given day to determine which events happened and when they occurred; this became our referential event model. Besides the OOOI events, we added estimated time of departure (ETD) and estimated time of arrival (ETA). We also captured more complex events such as aircraft ACARS initialization, pilot check-in, the pilot FAR 117 fit-for-duty ACARS notification, flight plan generation, dispatch release, post-departure close-out, delays and cancellations, to name a few. We then multiplied our event model against our flight schedule to create a realistic set of events we could replay day after day. The most creative aspect of this event model is that we could customize it for business rules and test cases. We could time-shift events for our international market so that they happened earlier, in line with business rules. We could also shift events later, disable them or duplicate them to test the time-based rules of the weight and balance system.
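Multiplying an event model against a schedule can be sketched as expanding per-flight templates, each defined as an offset from the scheduled departure or arrival. The Python below is a hypothetical illustration: the abbreviated event list, offsets, 30-minute international shift and the `disabled`/`duplicates` customization sets are assumptions, not Alaska's actual model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import AbstractSet


@dataclass(frozen=True)
class EventTemplate:
    name: str
    anchor: str          # "departure" or "arrival": which scheduled time the offset is relative to
    offset_minutes: int  # negative means before the anchor time


@dataclass(frozen=True)
class Flight:
    number: str
    departure: datetime
    arrival: datetime
    international: bool = False


# Hypothetical, abbreviated referential event model for one flight.
EVENT_MODEL = [
    EventTemplate("ACARS_INIT", "departure", -90),
    EventTemplate("DISPATCH_RELEASE", "departure", -60),
    EventTemplate("OUT", "departure", 0),
    EventTemplate("OFF", "departure", 12),
    EventTemplate("ON", "arrival", -8),
    EventTemplate("IN", "arrival", 0),
]


def expand_schedule(flights: list[Flight], model: list[EventTemplate],
                    international_shift: int = -30,
                    disabled: AbstractSet[tuple[str, str]] = frozenset(),
                    duplicates: AbstractSet[tuple[str, str]] = frozenset()
                    ) -> list[tuple[datetime, str, str]]:
    """Multiply the event model against the schedule, applying per-test customizations."""
    events = []
    for flight in flights:
        for tpl in model:
            key = (flight.number, tpl.name)
            if key in disabled:
                continue  # customization: suppress this event for this flight
            base = flight.departure if tpl.anchor == "departure" else flight.arrival
            when = base + timedelta(minutes=tpl.offset_minutes)
            if flight.international and tpl.anchor == "departure" and tpl.offset_minutes < 0:
                when += timedelta(minutes=international_shift)  # pre-departure events happen earlier
            copies = 2 if key in duplicates else 1  # customization: send this event twice
            events.extend([(when, flight.number, tpl.name)] * copies)
    return sorted(events)
```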

With a realistic set of events in hand, all we had to do was develop some custom automation to perform them. Initially we used an off-the-shelf product, Parasoft SOAtest, but we have since evolved to a home-grown solution built on Microsoft .NET Core 3.0. This eventing engine uses MS-SQL as its core data source and executes web service events using SOAP XML over HTTP and REST JSON over HTTP. It also executes SQL events, via table updates and stored procedure executions, against their respective Microsoft SQL (MS-SQL) and IBM Informix databases, and it sends XML and fixed-width messages via MQ. With this in place, we can now live through the entire life-cycle of all flights for a given day, with a realistic, accurate eventing solution that is repeatable day after day.
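The core of such an engine is a dispatcher that runs due events in time order and hands each one to the handler for its transport. The actual engine is written in .NET Core; the Python sketch below only illustrates a handler-registry pattern, and its event fields (`due`, `kind`, `endpoint`, `procedure`, `database`, `queue`) are hypothetical. The stand-in handlers return descriptions instead of calling real HTTP, SQL or MQ clients.

```python
from typing import Callable

Handler = Callable[[dict], str]
HANDLERS: dict[str, Handler] = {}


def handles(kind: str):
    """Decorator that registers a handler for one event kind."""
    def register(func: Handler) -> Handler:
        HANDLERS[kind] = func
        return func
    return register


# Stand-in handlers: each returns a description instead of calling a real transport.
@handles("soap")
def send_soap(event: dict) -> str:
    return f"SOAP POST {event['endpoint']}"


@handles("rest")
def send_rest(event: dict) -> str:
    return f"JSON POST {event['endpoint']}"


@handles("sql")
def run_sql(event: dict) -> str:
    return f"EXEC {event['procedure']} ON {event['database']}"


@handles("mq")
def put_mq(event: dict) -> str:
    return f"PUT {event['queue']}"


def run_events(events: list[dict]) -> list[str]:
    """Execute events in due-time order, dispatching each to the handler for its kind."""
    results = []
    for event in sorted(events, key=lambda e: e["due"]):
        handler = HANDLERS.get(event["kind"])
        if handler is None:
            raise ValueError(f"no handler registered for event kind {event['kind']!r}")
        results.append(handler(event))
    return results
```

Adding a new transport then means registering one more handler rather than changing the scheduling loop.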

OVERALL BENEFITS

Several teams work on our weight and balance system: manual test, automated test, performance test, configuration and training. All of them have differing data needs, so each has its own isolated environment, and Alaska has created a distinct solution to support the needs of each one. Our motto is: the right data, at the right time, in the right place.

We have also reduced the risk, and the potential cost, of a data breach. As we continue to give on-site and off-shore contractors more exposure to our data, we wanted to make sure we mitigated the risk of exposing actual sensitive data.

Our other solutions include stand-alone, integrated, rapid-refresh training environments. The data is right-sized: rather than pulling in an entire year's worth of data and then aging it, we take just the one day we need, which gives us a very fast refresh. One of these training environments can be torn down and rebuilt in ninety seconds. We can also pick a new scenario and lay out a completely new set of data to work with.

This has been a significant improvement over our old processes, where complete refreshes might take days, and it has really helped us expedite our work. We can also compare data in a pre- and post-state as it evolves, so we know that the business rules were executed and tested appropriately. We've described the eventing and its rich, production-like behavior above. We have simulated so many environments that it is hard to summarize them all here: some are pure, with all the flows hitting all the real systems, while others are virtual, with synthetic messages. Purely synthetic messages give the most flexibility, since they are not limited to what might happen naturally.

An unintended consequence of this testing work was that much of what we had put together ultimately gave us a rich data set for realistic training environments. We could then abstract away all the dependencies we had in our CERT environment except for MQ. We eliminated the need for database servers and database management systems, as well as for application servers and web servers and, most importantly, we eliminated the system's dependency on our enterprise service bus for integration. We had set up a proxy to record all of the messages fed into our original weight and balance system under test, including their time stamps. We could then apply date-time transforms to the XML messages and replay them directly into a training environment. This was great for environmental cost savings; however, the training team had greater data demands than any of the other teams we had worked with. They wanted to train 10 students at a time against all scenarios, continually, across multiple time slots a day.
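The record-and-replay step can be sketched as computing one offset between the recording's first message and the desired replay start, then shifting both the send times and the timestamps inside each XML message. This Python sketch uses the standard library's `xml.etree.ElementTree`; the ISO-style timestamp format, the un-namespaced element names and the `(recorded_at, xml_text)` recording format are assumptions for illustration.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timedelta

TIME_FORMAT = "%Y-%m-%dT%H:%M:%S"  # assumed timestamp format inside the recorded XML


def shift_xml_times(xml_text: str, time_tags: set[str], offset: timedelta) -> str:
    """Shift the text of every element whose tag is in time_tags by offset."""
    root = ET.fromstring(xml_text)
    for elem in root.iter():
        if elem.tag in time_tags and elem.text:
            shifted = datetime.strptime(elem.text.strip(), TIME_FORMAT) + offset
            elem.text = shifted.strftime(TIME_FORMAT)
    return ET.tostring(root, encoding="unicode")


def build_replay(recording: list[tuple[datetime, str]], replay_start: datetime,
                 time_tags: set[str]) -> list[tuple[datetime, str]]:
    """Re-time a recorded message stream so it begins at replay_start, preserving
    the original gaps between messages and shifting timestamps inside each message."""
    offset = replay_start - min(ts for ts, _ in recording)
    return [(ts + offset, shift_xml_times(xml, time_tags, offset))
            for ts, xml in sorted(recording)]


msg = "<FlightEvent><Flight>AS1</Flight><ActualOut>2019-05-01T08:00:00</ActualOut></FlightEvent>"
replay = build_replay([(datetime(2019, 5, 1, 8, 5), msg)], datetime(2019, 6, 1, 8, 5), {"ActualOut"})
```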

Meeting the training team's needs was a multidimensional scaling challenge. We solved the scenario challenge by extracting the desired scenarios for each aircraft type from the recording as a base data set.

Cloning real aircraft into virtual aircraft allowed us to scale, giving each student multiple virtual aircraft of their own for all their training scenarios.
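Cloning can be pictured as stamping out per-student copies of each base aircraft under a unique virtual identifier. In the Python sketch below, the `Aircraft` fields, the example registrations and the `<real tail>-S<student>C<copy>` naming scheme are hypothetical; uniqueness of the clones assumes the base tails are unique.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Aircraft:
    tail: str
    aircraft_type: str
    scenario: str
    student: Optional[str] = None


def clone_for_students(base: list[Aircraft], students: list[str],
                       copies: int = 1) -> list[Aircraft]:
    """Give every student `copies` virtual clones of each base aircraft.
    The hypothetical tail format '<real tail>-S<student>C<copy>' keeps clones unique."""
    clones = []
    for s_index, student in enumerate(students, start=1):
        for aircraft in base:
            for copy_no in range(1, copies + 1):
                tail = f"{aircraft.tail}-S{s_index:02d}C{copy_no}"
                clones.append(replace(aircraft, tail=tail, student=student))
    return clones


base = [Aircraft("N123AS", "737-900", "dangerous goods"),
        Aircraft("N456AS", "Q400", "live animals")]
clones = clone_for_students(base, ["alice", "bob"], copies=2)
```

For a class of 10, the same call with ten student names and two copies would yield 40 independent virtual aircraft from two recorded ones.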

Rather than have a student sit in a class waiting through a six-hour flight for the next opportunity to train, we needed to increase the frequency of flights. We solved this student downtime problem in two dimensions: first through the multiple virtual aircraft mentioned above, and second by compressing the duration of the flight scenarios. Some flight durations were reduced to 15 minutes and some to 1 hour, depending on the complexity of the training scenario.
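One simple way to compress a scenario is a linear rescaling of its timeline: an event at original time $t$ in a scenario spanning $[s, e]$ moves to $s' + (t - s)\cdot D'/(e - s)$, where $s'$ is the new start and $D'$ the target duration. The Python sketch below applies that formula; linear scaling is an assumption rather than the documented method, and a real implementation would also need to respect any minimum gaps imposed by the weight and balance system's timeline rules.

```python
from datetime import datetime, timedelta


def compress_timeline(events: list[tuple[datetime, str]], new_start: datetime,
                      target_duration: timedelta) -> list[tuple[datetime, str]]:
    """Linearly rescale one flight scenario's events to fit target_duration,
    starting at new_start while preserving their relative spacing."""
    times = [t for t, _ in events]
    start, end = min(times), max(times)
    original = end - start
    if original <= timedelta(0):
        raise ValueError("scenario needs at least two distinct event times")
    factor = target_duration / original  # timedelta / timedelta -> float
    return [(new_start + (t - start) * factor, name) for t, name in sorted(events)]


# A hypothetical six-hour scenario squeezed into 15 minutes.
six_hour = [(datetime(2019, 5, 1, 8, 0), "OUT"),
            (datetime(2019, 5, 1, 11, 0), "ETA_UPDATE"),
            (datetime(2019, 5, 1, 14, 0), "IN")]
fast = compress_timeline(six_hour, datetime(2019, 5, 1, 9, 0), timedelta(minutes=15))
```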

The weight and balance training environment became a remarkable and unanticipated benefit of the overall solution. To our surprise, we found that over 6,500 front-line employees have been trained on weight and balance procedures in this environment.

One variation blends what we call engineered data, which is under TDM control, with actual naturally occurring data. It works by detecting the type of data, generating the appropriate events for that specific data and dynamically routing it between virtual and real services. This allowed multiple Alaska Airlines teams to coexist within the same environment. This environment was critical to the success of the integration of the Alaska Airlines and Virgin America passenger service systems (PSS). We are continuing to develop it to support future cross-team testing efforts.
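The routing half of this blended approach can be sketched as a classifier plus a router. The Python below assumes, purely for illustration, that engineered flights are recognizable by a reserved flight-number range; the article does not say how Alaska detects engineered data, and event generation is left out.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical convention: engineered (TDM-controlled) flights use a reserved number range.
ENGINEERED_FLIGHTS = range(9000, 10000)


def is_engineered(request: dict) -> bool:
    """Detect engineered data; anything else is treated as naturally occurring."""
    return request["flight_number"] in ENGINEERED_FLIGHTS


@dataclass
class Router:
    virtual: Callable[[dict], str]  # virtual service that understands engineered data
    real: Callable[[dict], str]     # real downstream service for natural data

    def route(self, request: dict) -> str:
        target = self.virtual if is_engineered(request) else self.real
        return target(request)


router = Router(virtual=lambda req: "virtual", real=lambda req: "real")
```

Because the decision is made per request, teams using engineered data and teams using natural data can share one environment without interfering with each other.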

One last point: much like the wider digital transformation, this has been a transformation for Alaska Airlines. It has been a journey, and one that is not yet complete. We'd like to offer to share this further with readers. We've tried to compress five years' experience into a few thousand words, which has meant that, of necessity, this is a high-level overview. Some readers will not be as far along the journey as Alaska and others will be further ahead than us: we'd love to share ideas with both groups, especially on the topic of exotic testing scenarios. These techniques can be used whether you're an airline undertaking integrations or a vendor building solutions.

We hope this brief overview of our experience will prove useful to readers who are also treading the same digital path that we and many others are treading.

Contributor’s Details

Ryan Papineau

Ryan is an Automated Test Engineer who specializes in service virtualization to provide repeatable and consistent services to training and testing groups at Alaska Airlines. Before joining Alaska in 2011, Ryan undertook systems engineering and quality engineering work with several northwest companies with a focus on networking and mobile. He holds a Bachelor of Science in Business Management from University of Phoenix.

Jeff Crose

Jeff is a Software Development Engineer in Test who partners with development and quality assurance teams to provide high quality, right-sized test data sources for functional and performance testing. He joined Alaska Airlines in December 2013 where he took the lead on implementing a test data management strategy for the organization. Jeff holds a Bachelor of Arts degree in Political Science from Western Washington University.

Alaska Airlines

Alaska Airlines is the fifth-largest U.S. airline based on passenger traffic and has expanded significantly to serve several U.S. East Coast and southern cities, plus nonstop service from Los Angeles to Costa Rica and Mexico City. Alaska Airlines, Virgin America and Horizon Air are owned by Alaska Air Group. Alaska Airlines maintains a young operational fleet of 166 Boeing 737 aircraft, 71 Airbus A320 family aircraft, 35 Bombardier Q400 aircraft and 26 Embraer 175 aircraft.
