
White Paper: Digital Towers and sharing useful information

Author: Andy Taylor, CSO Digital Towers at NATS


Andy Taylor, CSO Digital Towers at NATS, explains the benefits of AI and data for ATC and airline operations.

Before we go into the meat of this white paper, it will be useful to give a brief introduction to NATS, which readers will know as the main Air Traffic Services provider in the UK. We also support the operations of airports, ANSPs (Air Navigation Service Providers) and airlines from North America to Asia Pacific. I’ll focus on some of our work in the UK and, particularly, what we’re doing in places like Hong Kong and at New York’s LaGuardia with Delta Air Lines. I won’t just look at remote Air Traffic Control towers, as that’s a straightforward use case: taking a traditional operation and digitalizing it so that it can be run remotely. That’s something we can do with our partner organization, Ottawa, Canada-based Searidge Technologies, which is wholly owned by NATS and has been supplying remote towers for a considerable length of time. However, our focus now is on making digitalization more effective for all operational stakeholders at an airport, as well as for the traditional prime users of the system.

TRADITIONAL ANALOG SYSTEMS

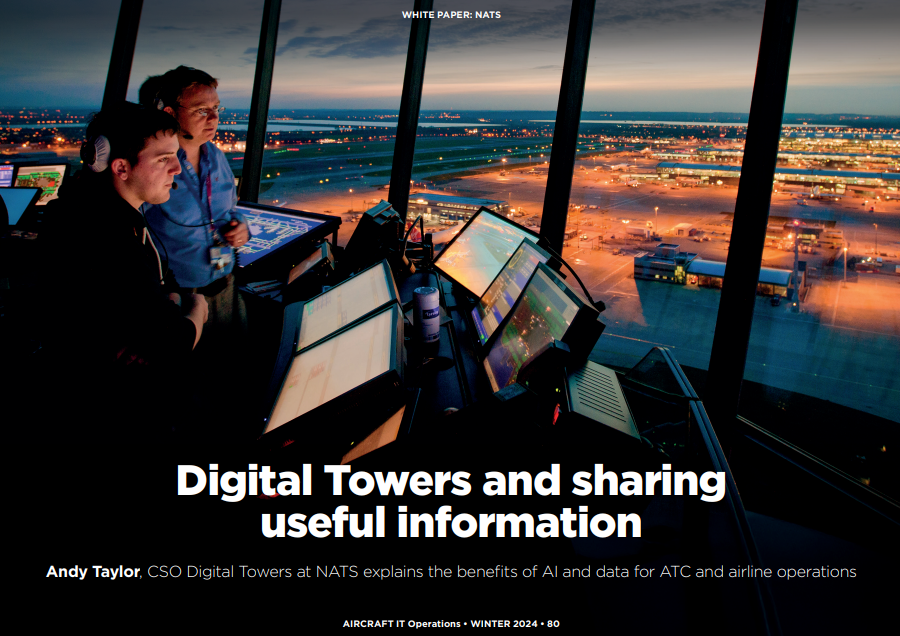

Today, at most airports, air traffic is managed from a physical control tower, and the air traffic controllers within that tower use analog data. Seventy percent of the information they use to make decisions on your flights is gained by looking out of the window; it’s analog data (figure 1).

Figure 1

The image in figure 1 is London Heathrow, but it could equally be any airport in the world, and most of the information is gathered by looking out of the window. That means that across the whole airport, and critically here for British Airways and its Terminal Five operation, at most ten people have access to the analog data on which key decisions are based. Regardless of all the scheduling and planning that has taken place (NATS is closely involved in this at Heathrow and a number of other airports), and regardless of the ‘on the day’ planning and tactical management of that plan, as soon as an aircraft is ready to push back, the ten people in the control tower have access to data that no-one else has. Each of them gathers that data individually by looking out of the window and then compares it with separate data on electronic systems. Each person is effectively re-processing the same data simultaneously, which is clearly not the most efficient way to operate in today’s digital age. Nor is it a fully coordinated operation: one in which the plan agreed pre-season is operated on the day as planned, and any tactical interventions are agreed between the stakeholders, namely airport operations and, critically from the readers’ perspective, airline operations.

My view is that we risk continuing to operate in a compromised environment, with a significant amount of analog data available to very few people. We need to change that, not simply by replicating our towers and operating them remotely, but by using the additional digitized data to provide information and direction for each role in the control tower as well as for operational roles beyond it. We need to remove the duplicated data processing from the humans’ workload, share that information, and make decisions collaboratively.

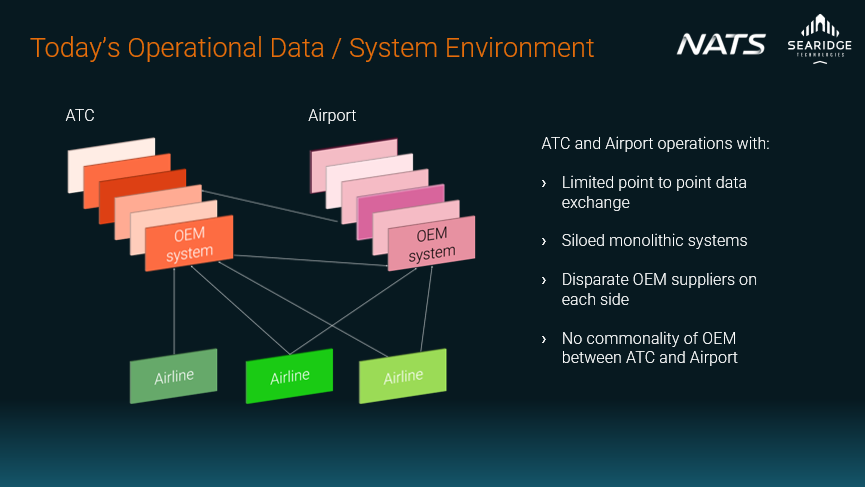

The data environment (figure 2.1) has the potential to be complicated, but I’ve simplified it for the figure.

Figure 2.1

Effectively, in the air traffic control environment inside the control tower there are many OEM systems in black boxes. There’s a similar number of boxed OEM systems on the airport side and likewise from the airlines’ perspective. In short, there are multiple systems from multiple vendors with point-to-point connections (figure 2.2).

Figure 2.2

Between these point solutions there are only limited connections, enabled through Interface Control Documents (ICDs), and as a result there is a lack of shared data and holistic data management across the two environments (ATC and airport). There is a similar problem with data sharing and coordination with the airlines, as Air Traffic Control also feeds information into various parts of their systems.
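To make the scaling problem concrete, the back-of-the-envelope sketch below compares interface counts, assuming the worst case in which every pair of systems needs its own bilateral interface (and ICD). The text notes that real connections are limited, so the point-to-point figure is an upper bound, and the system counts are illustrative rather than taken from any airport.

```python
def point_to_point_interfaces(n_systems: int) -> int:
    """Worst case: every pair of systems needs its own bilateral interface (and ICD)."""
    return n_systems * (n_systems - 1) // 2


def shared_platform_interfaces(n_systems: int) -> int:
    """Each system connects once, to a common data platform."""
    return n_systems


# Illustrative system counts only.
for n in (5, 10, 20):
    print(f"{n} systems: {point_to_point_interfaces(n)} point-to-point links "
          f"vs {shared_platform_interfaces(n)} platform connections")
```

The pairwise count grows quadratically while the platform count grows linearly, which is one way of seeing why a shared platform scales better as vendors and stakeholders are added.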

Different OEMs serve the ATC world from those that serve the airport or airline worlds. Those markets are effectively siloed from one another, and there are silos within each market too, which does not help us create an optimal operational environment.

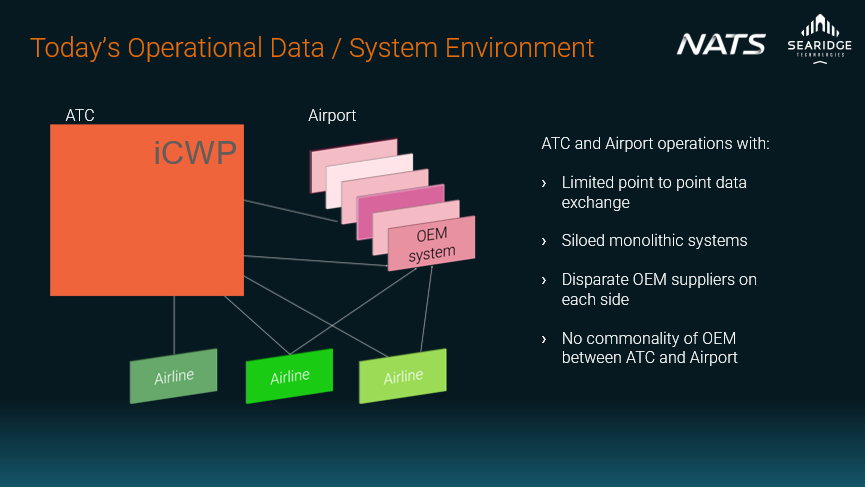

TODAY’S DIGITAL SYSTEMS

From an ATC perspective, OEMs generally focus on the Integrated Controller Working Position (ICWP), in which all of those separate black boxes are consolidated into one black box (figure 2.2 above). Effectively, we still have the same issues: 70% of the information is still outside the window, analog and not included in the ICWP, and the controllers still each act as analog data integrators, duplicating one another’s work. None of that information is shared any more effectively with the airport operator’s stakeholders, and none of it is shared any better with the airline operators.

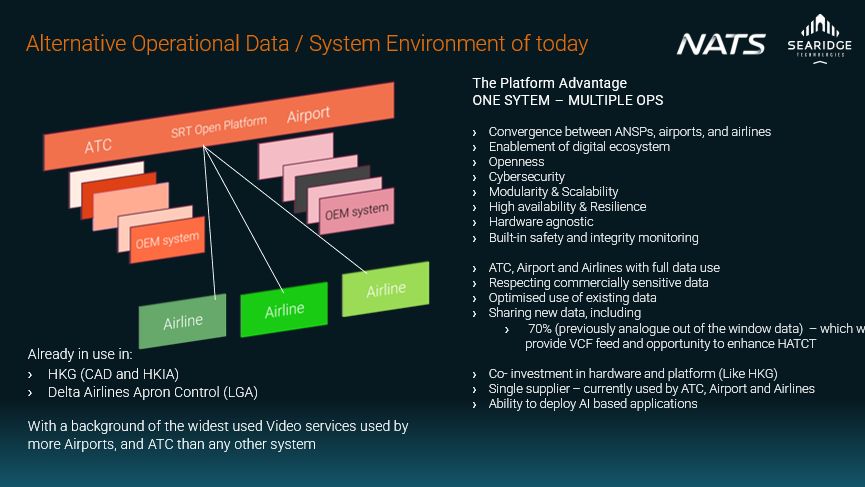

The approach I advocate, and what we have implemented at LaGuardia and, in particular, Hong Kong, cuts across the two verticals (airport and ATC) with a platform that is accessible and truly open; this is what our digital tower deployments now look like (figure 2.3).

Figure 2.3

Our partner organization, Searidge Technologies, and NATS have developed a genuinely open platform. We want as many vendors and start-ups as possible to come on to that platform to develop applications and to participate in the shared data environment described earlier, respecting the commercial limits on what data airlines want to share while still providing that holistic data area. Later in this paper we’ll look at some examples of how we’re doing that with Delta and in Hong Kong (HKG).
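One way to picture a shared data environment that still respects what each airline is willing to share is a publish/subscribe hub with per-record sharing rules. The sketch below is a toy model under that assumption; the `Record` and `SharedDataPlatform` names, the topic string and the stakeholder labels are all hypothetical and do not describe the actual NATS/Searidge platform.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Record:
    topic: str                      # hypothetical topic name, e.g. "turnaround"
    owner: str                      # stakeholder that published the record
    payload: dict
    shared_with: set[str] = field(default_factory=set)  # who the owner allows to see it


class SharedDataPlatform:
    """Toy publish/subscribe hub: each record is published once and delivered
    only to subscribers the owner has agreed to share it with."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[tuple[str, Callable[[Record], None]]]] = {}

    def subscribe(self, topic: str, stakeholder: str,
                  handler: Callable[[Record], None]) -> None:
        self._subscribers.setdefault(topic, []).append((stakeholder, handler))

    def publish(self, record: Record) -> int:
        """Deliver the record to every permitted subscriber; return how many received it."""
        delivered = 0
        for stakeholder, handler in self._subscribers.get(record.topic, []):
            if stakeholder == record.owner or stakeholder in record.shared_with:
                handler(record)
                delivered += 1
        return delivered


# Usage: an airline shares a turnaround update with ATC and the airport, but not a competitor.
received: dict[str, list[Record]] = {"ATC": [], "Airport": [], "AirlineB": []}
platform = SharedDataPlatform()
for who, inbox in received.items():
    platform.subscribe("turnaround", who, inbox.append)

delivered = platform.publish(Record(
    topic="turnaround",
    owner="AirlineA",
    payload={"flight": "XX123", "tobt": "10:05"},
    shared_with={"ATC", "Airport"},
))
```

The design choice illustrated is that data is held once on the platform and sharing is a policy on each record, rather than a separate point-to-point feed per consumer.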

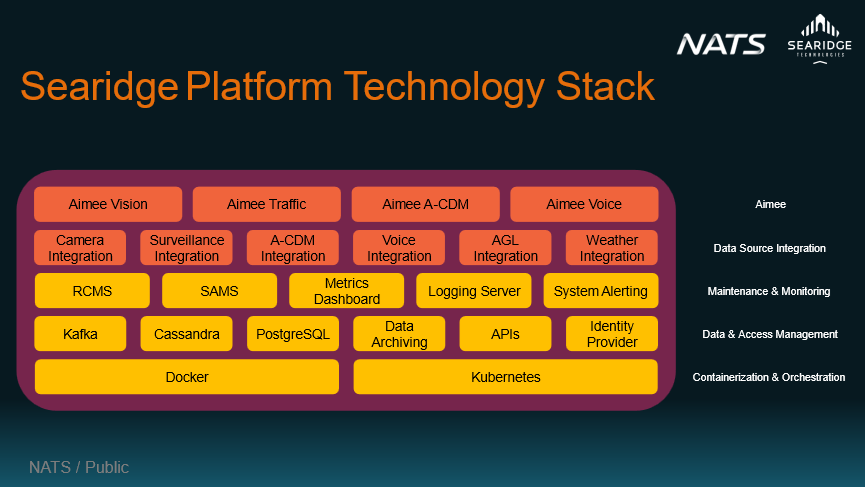

NATS invested in Searidge because it gave us an extremely useful capability to digitalize our current analog operations from an air traffic perspective, and also because it meant that we could deploy a platform onto which we could bring other users. Again, the figure simplifies the platform and its different layers of use; the key part for me, and the main focus of this paper, is Aimee, our AI engine (figure 3.1).

Figure 3.1

Diving deeper into our platform’s technology stack, we have Aimee Vision, Aimee Traffic, Aimee A-CDM and Aimee Voice (figure 3.2).

Figure 3.2

Each component does largely what its name suggests. Aimee Vision digitizes optical views and has the AI process them in real time. Aimee Traffic ingests sensor data from radars and similar sources, analyzes it and makes predictions in real time. Aimee A-CDM is a development area where we’re bringing in collaborative decision making; it shows the direction we’re heading. Aimee Voice completes the set: communication between air traffic control and airline pilots is by voice, as is much of the communication between controllers and the other operations centers around an airport, so voice is significant. Aimee monitors those voice communications and converts them to text, which can then be used for various kinds of algorithmic support.

I won’t dwell on the cyber security behind this approach, although it is clearly a key part of it. As an air traffic service organization, we consider data security incredibly important, and it is a core principle for all data applications, legacy or otherwise. The key point for this paper, however, is that our trajectory is to move away from black boxes and on-site processing towards using the Cloud, including open cloud sources, for various parts of the data application.

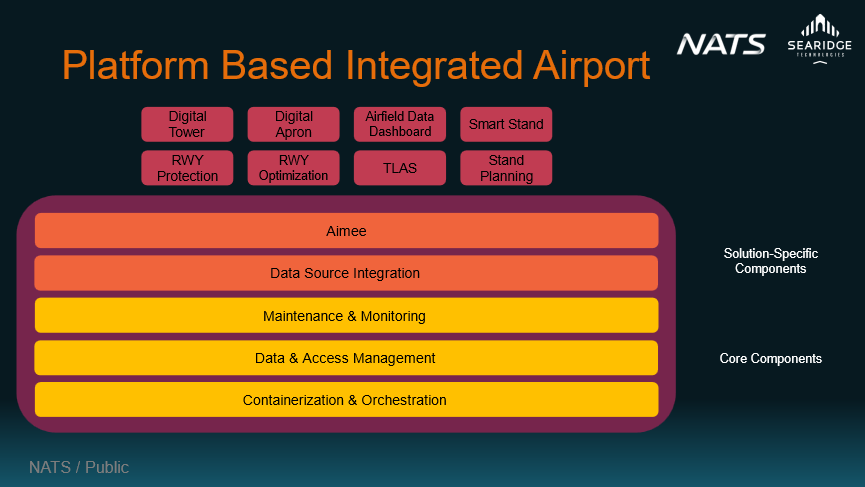

Building on that platform, we can deliver a number of capabilities to any user as applications; for expediency, figure 3.3 shows eight examples.

Figure 3.3

These can serve airport, airline and ATC operations.

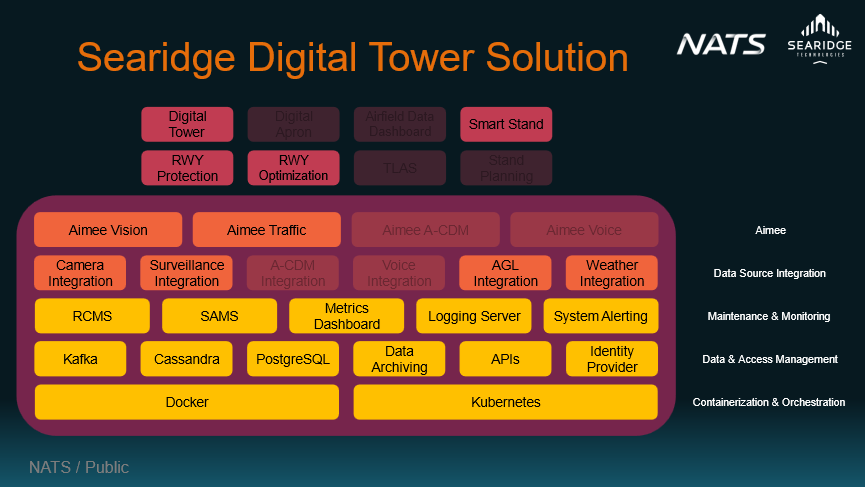

Just as you select applications that you want to use on your smartphone, in the example below, from an ATC perspective, we have applications selected for runway protection, optimization and managing aircraft on and off stands (figure 3.4).

Figure 3.4

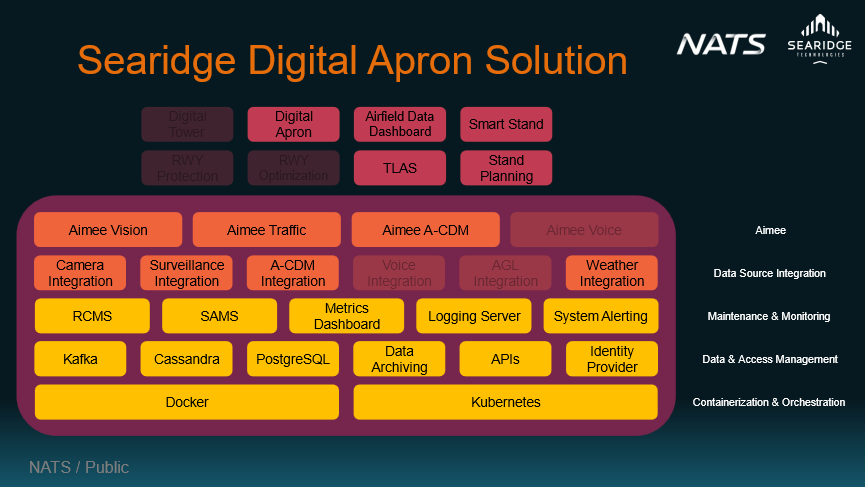

An apron control unit (figure 3.5) can be operated by an airline or an airport, or can be part of the ATC solution, depending on how the tasks are divided at that location.

Figure 3.5

So, whether we take a digital apron tower, ATC or another function, we can provide a different front-end and different applications to each, but it all sits on the same fully integrated data platform.

HKG: THE WORLD’S LARGEST DIGITAL TOWER

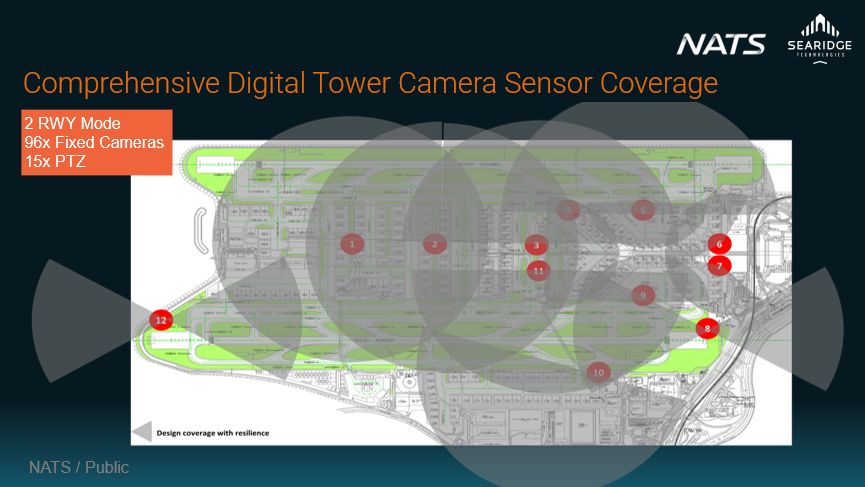

What follows describes what we have delivered in practice in Hong Kong: One System, Integrated Operations, currently the world’s largest digital tower. As Hong Kong expands to three-runway operations, its aims are greater resilience, better optimization of throughput capacity and resources, and the adoption of AI and new ways of working, along with data integration and enhanced collaborative decision making. The airport also has three physical control towers, so it’s important that those assets are used optimally, bearing in mind that operating from any physical location involves compromises: parts of the airfield are distant and some are even obscured. In my experience, it is possible to ‘hide’ an Airbus A380 behind some of the terminal buildings at Heathrow, which means that air traffic controllers relying on their analog processes alone can’t monitor it. Figure 4 shows how we approached digitization in Hong Kong.

Figure 4

We have 96 cameras installed in a number of separate arrays, each shown by a red dot. Coverage isn’t limited to the circles drawn, but the figure shows that the system can ‘see’ any part of the airfield: nowhere on the airport is more than 500 m from an array of cameras. We combine their images into panoramas, just as we do for remote digital tower solutions, so that we get a more contextualized view rather than a number of separate ‘thumbnail’ pictures, as would be seen in, say, a security control room where operators are simply looking for intruders. With these arrays, we look across a wider area to understand exactly what’s going on in the context of other aircraft movements.
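The 500 m design target can be expressed as a simple check: sample points across the airfield, find the nearest array for each, and confirm the worst case stays within range. The sketch below assumes hypothetical local x/y coordinates in metres and treats coverage as pure distance; a real siting study would also account for line of sight, occlusion by buildings and camera field of view.

```python
import math

MAX_RANGE_M = 500.0   # figure quoted in the text: nowhere more than 500 m from an array


def farthest_gap_m(points, arrays) -> float:
    """Largest distance from any airfield sample point to its nearest camera array."""
    return max(min(math.dist(p, a) for a in arrays) for p in points)


def fully_covered(points, arrays, max_range_m: float = MAX_RANGE_M) -> bool:
    return farthest_gap_m(points, arrays) <= max_range_m


# Hypothetical example: sample points every 50 m along a 2 km taxiway (local x/y in metres).
taxiway = [(x, 0.0) for x in range(0, 2001, 50)]
arrays = [(400.0, 0.0), (1200.0, 0.0), (2000.0, 0.0)]
print(farthest_gap_m(taxiway, arrays), fully_covered(taxiway, arrays))   # 400.0 True
print(fully_covered(taxiway, [(400.0, 0.0), (2000.0, 0.0)]))             # False
```

Removing the middle array leaves a point 800 m from the nearest camera, so the check fails; the same test could be rerun as arrays are added for the third runway.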

The system can also be expanded as the airport grows: as the third runway comes online in Hong Kong, additional arrays will be deployed, taking the total to around 200 cameras on site. With such full coverage of the airside areas, these cameras can also provide real-time feeds for functions such as security and can replace some existing single-use systems, like CCTV. If you’re reading this and thinking ‘this all looks very expensive’, the point of the investment is for the asset to serve multiple purposes and multiple users, so that the cost is shared. It also removes some of the black box solutions and potentially eliminates duplication on site.

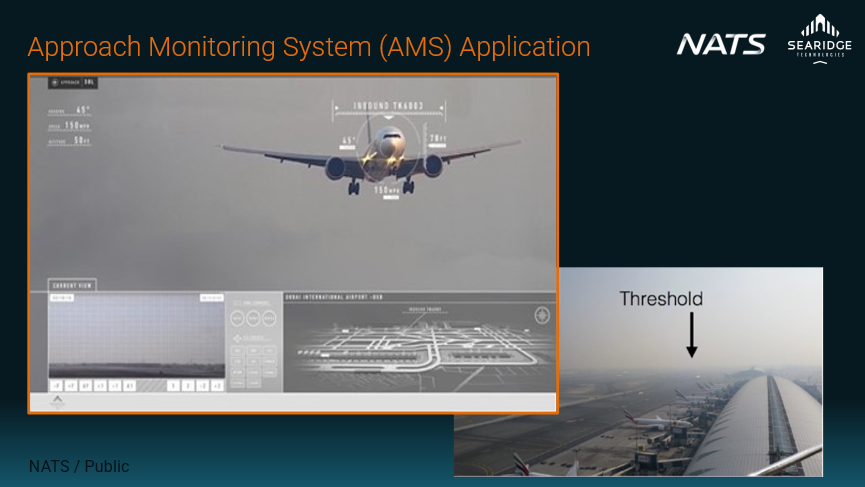

One application made possible by the coverage of these operational-grade cameras is our Approach Monitoring System (AMS) (figure 5).

Figure 5

While the picture shows our Approach Monitoring System deployed in Dubai, the system has also been deployed in several other locations, including Hong Kong and Singapore.

The image above shows what the controllers can see from the Air Traffic Control Tower. It’s typical in that, as an Air Traffic Controller, you often can’t see the end of the runway (marked ‘Threshold’), so you can’t observe aircraft touching down until they’re on the runway surface and rolling out. That is low-fidelity analog data, and, as I said previously, often only ten controllers have even this information; no-one else has even that low-fidelity view. The improved fidelity made possible by digitization (see the left-hand image in figure 5) comes from the approach monitoring cameras. In this example, an aircraft is visible six miles out on the approach, and the system overlays additional information about the arriving flight for, in this case, the operations team, though it could equally be the air traffic control team.

As an example of the improved fidelity, it would be possible to check that the aircraft’s flaps are down and its gear is down. Because the image is digitized data, as soon as a human can see that level of detail, so can Aimee Vision; this is where AI processing can come in and check every single arrival to make sure that it’s configured correctly. We can also identify the point at which the gear was deployed: at very noise-sensitive airports that prefer aircraft to keep their gear up for as long as possible, for example, that can be monitored in real time.
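Once a vision model labels each frame of an approach, both checks described above reduce to simple logic over the track. The sketch below assumes hypothetical per-frame outputs (`gear_down`, `flaps_extended`) and distances in nautical miles from the threshold; the 2 NM check point is an arbitrary illustrative parameter, not an operational rule, and this is not how Aimee Vision is implemented.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observation:
    distance_nm: float      # distance from the runway threshold when the frame was captured
    gear_down: bool         # hypothetical per-frame output of a vision classifier
    flaps_extended: bool    # likewise hypothetical


def gear_deployment_point(track: list[Observation]) -> Optional[float]:
    """Distance (NM) at which the gear was first seen down, scanning from farthest out inwards."""
    for obs in sorted(track, key=lambda o: o.distance_nm, reverse=True):
        if obs.gear_down:
            return obs.distance_nm
    return None


def configuration_alert(track: list[Observation], check_inside_nm: float = 2.0) -> bool:
    """True if any frame at or inside check_inside_nm shows gear up or flaps retracted."""
    return any(
        not (obs.gear_down and obs.flaps_extended)
        for obs in track
        if obs.distance_nm <= check_inside_nm
    )


approach = [
    Observation(6.0, gear_down=False, flaps_extended=True),
    Observation(4.5, gear_down=False, flaps_extended=True),
    Observation(3.2, gear_down=True, flaps_extended=True),
    Observation(1.5, gear_down=True, flaps_extended=True),
]
print(gear_deployment_point(approach))   # 3.2
print(configuration_alert(approach))     # False
```

For a noise-sensitive airport, the recorded deployment point for each arrival could be logged and compared against local procedures, while the configuration alert covers the safety check.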

INTEGRATED OPERATIONS CONTROL

Once all of the information is on a single platform, we can integrate a large number of different functions and features, including the examples below from Hong Kong and from Delta’s Ramp Control at LaGuardia, New York, USA.

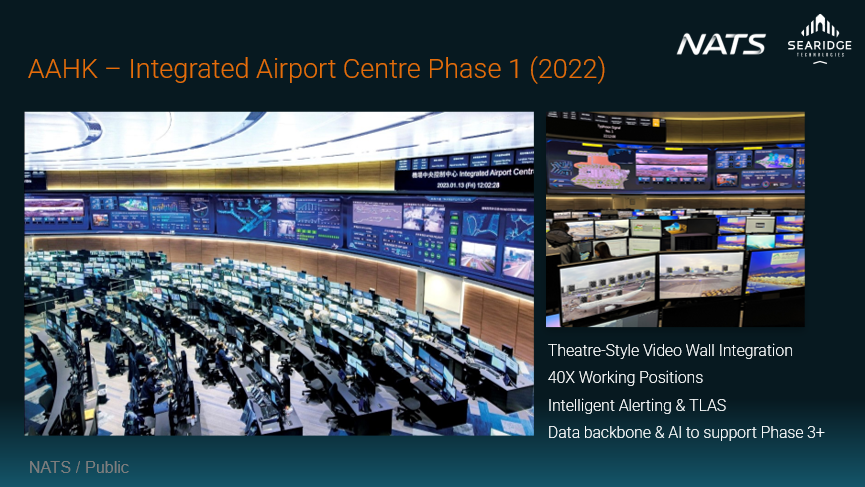

Figure 6 shows the first phase of the project in Hong Kong.

Figure 6

There’s not an air traffic controller in sight in this picture because this is the Integrated Operations Center at Hong Kong International Airport. It’s the airport company’s operations center which, along with the more obvious three Air Traffic Control towers, is the other key part of the control process for managing Air Traffic Movements on the airport. This Airport Operations Center is now seamlessly connected to the Air Traffic Control Tower, so everybody has the same information: there are 40 positions with different roles inside the Operations Center that now have access to this digital information. Information that only ten people used to have is now available to more than 40 people in this room alone. Effectively, everybody is ‘in’ the control tower, with access to the roughly 70% of information that used to be gathered only by looking out of the tower window, and anybody on the airport who becomes a user of this system can also be granted access.

Let’s look more closely at what can now be done in Hong Kong from an operations center and apron management perspective. Figure 7 is a panoramic view of one of the terminals.

Figure 7

You can see that each parking stand is not only identified but also overlaid with information from the airport Collaborative Decision Making (CDM) system. The target off-blocks time (TOBT) is input by the airline’s handling agent, and the target start-up approval times (TSAT) are generated as a result. What you would normally see in tabulated format you can now see in context: the target off-blocks times can be read while looking directly at each aircraft in turn, visually checking how likely it is that each target off-blocks time will be met. This means that you can spot a failing target off-blocks time earlier than you would by waiting for it to be updated in the CDM system; because of the manual procedure used at airports that have adopted CDM, that update is often made only about a minute before the target off-blocks time expires anyway.

Target off-blocks times also already have an element of uncertainty built in under Collaborative Decision Making, as they carry a tolerance window of minus five to plus five minutes; that’s a ten-minute window of uncertainty. For anybody in ATC (or any other part of operations control), that’s too wide for planning which movements we’re going to make. It can affect whether an aircraft can push back or taxi, and it can have many negative or positive effects on the flight, from the moment the aircraft’s engines are running through to how effectively the stands can be managed.
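The width of that tolerance window is easy to see in code. The sketch below assumes the minus five to plus five minute tolerance described above and treats both ends of the window as inclusive; the exact boundary convention is an assumption and may differ between airports.

```python
from datetime import datetime, timedelta

# Tolerance described in the text: minus five to plus five minutes around the TOBT.
TOBT_TOLERANCE = timedelta(minutes=5)


def tobt_window(tobt: datetime) -> tuple[datetime, datetime]:
    """Earliest and latest off-block times still counted as meeting the TOBT (inclusive, assumed)."""
    return tobt - TOBT_TOLERANCE, tobt + TOBT_TOLERANCE


def within_tobt_window(tobt: datetime, off_block: datetime) -> bool:
    earliest, latest = tobt_window(tobt)
    return earliest <= off_block <= latest


tobt = datetime(2024, 5, 1, 10, 0)   # arbitrary example TOBT of 10:00
earliest, latest = tobt_window(tobt)
print(latest - earliest)                                        # 0:10:00
print(within_tobt_window(tobt, tobt + timedelta(minutes=4)))    # True
print(within_tobt_window(tobt, tobt + timedelta(minutes=7)))    # False
```

An off-block time anywhere in that ten-minute span counts as compliant, which is why a visual estimate of turnaround progress can add precision for planning ahead of the TOBT update.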

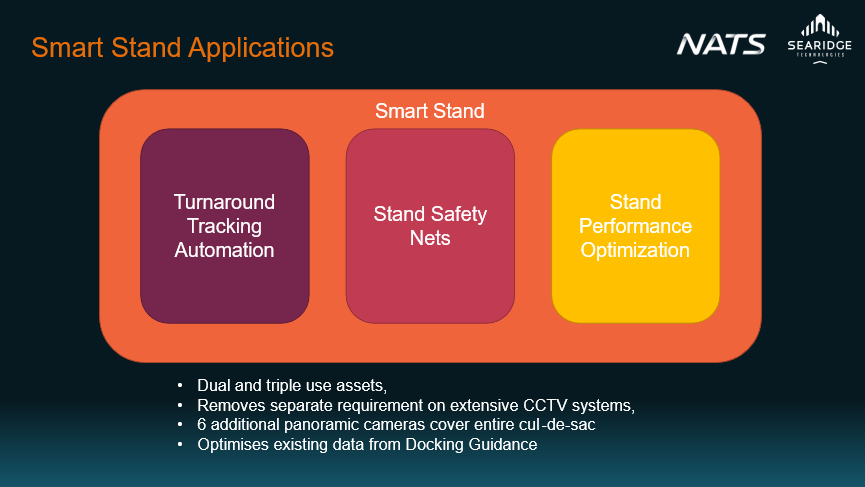

This contextual, data-based operation is simply the first phase in HKG. The airport operations center now has access to information it never had before, overlaid with its own data, so its own data becomes more powerful and its capability likewise. However, as soon as we hand all of this over to Aimee Vision, the AI engine described earlier that sits within the data platform, and it starts processing all of the now-digitized visual information, we can also add other applications. One such application is called Smart Stand (figure 7).

Part of this application handles turnaround tracking, making sure that each milestone towards the target off-blocks time is checked autonomously, such as ‘when does fueling start?’ and ‘when is baggage loading complete?’. We can also check safety nets, which can be anything from ‘are people wearing PPE on the stand?’ to ‘did vehicles do brake checks as they approached the aircraft, to ensure that they don’t hit it?’. In the future, we can also bring in the CDM part to do stand planning and performance optimization.

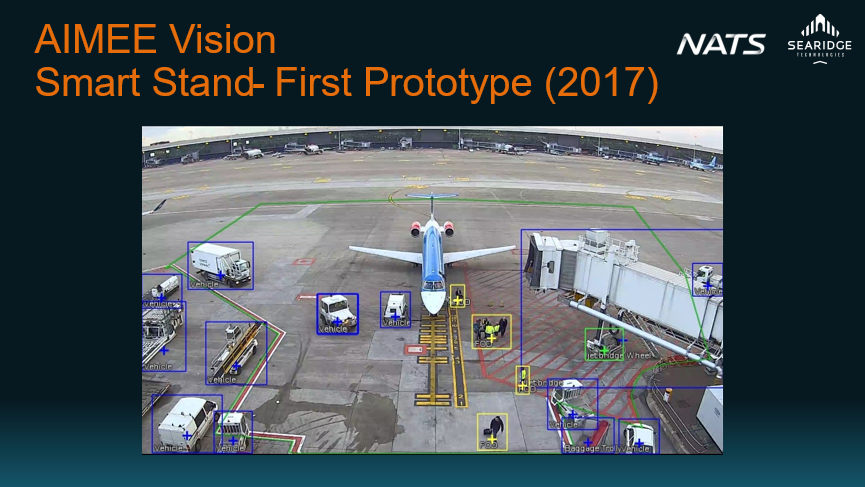

Our Smart Stand turnaround tracking was developed drawing on our operational experience of managing turns within the overall ATM environment on the airport, with a focus on overcoming current limitations of the CDM and safety management processes. To do this, we moved away from more basic, commonly used AI vision techniques such as ‘box and follow’, where the AI looks for moving pixels, draws boxes around them and carries out its assessment on that basis. We used that model back in 2017 and found it less effective in certain use cases. Figure 8 shows this initial boxing algorithm, while figure 9 shows what Smart Stand looks like with our higher-performance Aimee Vision AI model.

Figure 8

The image in figure 9 is from one of our panoramic cameras and indicates what the AI is doing: rather than boxing, it identifies each object and places a colored mask over it to show which type of object it is. You’ll also see various points where aircraft are pushing back or moving behind the aircraft on the stand. In its next iteration, the system can move to stand optimization, where its output will take account of what’s going on behind the parking stand, not just on it. So, when aircraft push back, when aircraft are towed behind a stand, or when inbound aircraft stop temporarily behind an aircraft that’s on stand, the Aimee AI system can inform other applications and users on the airport, including the airlines, of the impact of those movements. Likewise, our aim is to turn this data and decision making around to start directing Air Traffic Control so that the operations plan is maintained rather than disrupted.

You can also see how Smart Stand currently provides message output. This demo image uses a very simple indication: green areas are being completed on time, while an orange area means that, at the moment, the task is set to fail against its target, which is the target off-blocks time. On this particular turn, for example, the bags are currently running behind schedule. By monitoring every single parking stand on a genuinely open data platform, we can combine data from the stand docking guidance with the panoramic cameras and other sources, such as ATC, to produce the most accurate assessment possible. That means we can manage every single parking stand on your airfield and, at the same time, flag to you specifically the ones you need to watch, rather than you watching a wall of little thumbnails or waiting for data to be manually input from out on the ramp. The output can be focused to flag only those flights that you need to monitor and potentially manage.
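The green/orange logic and the "only show me the stands that need attention" output can be sketched as follows. This is a simplified illustration, assuming each milestone has a planned completion time that supports the TOBT and a projected completion time fused from cameras, docking guidance and other sources; the stand names, milestone names and times are hypothetical, and Smart Stand’s actual rules are not described in the source.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Milestone:
    name: str
    planned_done: datetime     # latest completion that still supports the TOBT
    projected_done: datetime   # current projection from cameras, docking guidance, etc.


def milestone_status(m: Milestone) -> str:
    """'green' if projected to finish on time, 'orange' if currently set to miss its target."""
    return "green" if m.projected_done <= m.planned_done else "orange"


def stands_to_watch(turns: dict[str, list[Milestone]]) -> list[str]:
    """Only the stands with at least one orange milestone, so operators needn't watch them all."""
    return sorted(
        stand for stand, milestones in turns.items()
        if any(milestone_status(m) == "orange" for m in milestones)
    )


t0 = datetime(2024, 5, 1, 9, 0)


def at(minutes: int) -> datetime:
    """Helper: a time `minutes` after the example start of the turn."""
    return t0 + timedelta(minutes=minutes)


turns = {
    "S12": [Milestone("fueling", at(25), at(20)),
            Milestone("baggage loading", at(40), at(48))],   # bags running late
    "S14": [Milestone("fueling", at(25), at(22))],
}
print(stands_to_watch(turns))   # ['S12']
```

Filtering to at-risk stands is what turns monitoring of every stand into a short, actionable list for the operator.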

SMART STAND SAFETY NETS

If you can track milestones, you can also track various safety events. One example is an aircraft parked only partially on its stand while another aircraft attempts to pass behind it, resulting in a wingtip-to-tail collision, which becomes a costly maintenance issue as well as affecting the safety of those on and around the aircraft. Clearly, we want to avoid that outcome, so having safety nets that advise Air Traffic Control and Airport Operations staff when an aircraft is not parked correctly, helping to reduce the potential for wingtip-to-tail collisions, is a no-brainer. Going beyond that, we work directly with airlines as well, providing an operations center with information to an ATC grade. At LaGuardia, Delta Air Lines operates two physical ramp control units from its own terminal to manage its pushbacks; they have to coordinate with each other as well as with the FAA tower as flights leave or enter their ramp area. Searidge gave them access to map data via the digital tower platform, which provides a whole-airport, real-time ground surveillance picture to ATC standard. They can now see beyond their own area of responsibility, what’s about to affect them, and how their pushbacks and taxi-outs will fit into the overall traffic flow. This is about making everybody aware of information that, as stated above, only a minority of people in the ATC tower might once have had.
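A partially parked aircraft can be flagged by comparing where its tail ends up with the taxilane clearance line behind the stand. The sketch below is a deliberately one-dimensional simplification, assuming positions measured along the stand centerline from the clearance line towards the nose stop mark; the 1 m buffer and the 38 m aircraft length are hypothetical, and a real safety net would work in two dimensions and include wingspan.

```python
def tail_clearance_m(nose_from_line_m: float, aircraft_length_m: float) -> float:
    """Distance from the tail to the taxilane clearance line; negative means the tail protrudes.

    Both distances are measured along the stand centerline, starting at the clearance line
    and increasing towards the nose stop mark (a simplifying 1-D assumption)."""
    return nose_from_line_m - aircraft_length_m


def parking_alert(nose_from_line_m: float, aircraft_length_m: float,
                  buffer_m: float = 1.0) -> bool:
    """Flag an aircraft whose tail is within buffer_m of (or beyond) the clearance line."""
    return tail_clearance_m(nose_from_line_m, aircraft_length_m) < buffer_m


# Hypothetical 38 m aircraft: correctly parked with its nose 45 m from the line,
# then stopped short with its nose only 36 m from the line.
print(parking_alert(45.0, 38.0))   # False (7 m clearance)
print(parking_alert(36.0, 38.0))   # True  (tail 2 m into the taxilane)
```

The nose position would come from the vision system or stand docking guidance; the alert would then be pushed to ATC and Airport Operations before another aircraft is routed behind the stand.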

We also have stand management as part of this range of applications. Our system is currently semi-autonomous: overnight, the stand plan is generated automatically with reference to a set of local hard and soft constraints. A hard constraint is, for example, that only a finite range of aircraft types can park on a particular stand, while a soft constraint might be that a flight identified as ‘premium’ should, where possible, park near the VIP lounge. With these constraints in mind, we can produce a stand plan automatically overnight rather than someone planning it manually. This plan can then be moved into the tactical phase. Tactically, the system operates semi-autonomously, providing insights into whether an aircraft is late or running late in its turnaround, so that the stand will be occupied for longer. It will then make tactical changes based on those hard and soft constraints and advise a human operator which rules are being affected, though it never suggests a change that would violate a hard constraint.
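The split between hard and soft constraints maps naturally onto "filter, then rank". The greedy sketch below shows that idea for the overnight plan, assuming hypothetical stands, flights, aircraft types and times (minutes after midnight); it is not the NATS/Searidge algorithm, it omits the tactical re-planning step, and a production planner would more likely use a formal optimizer than a greedy pass.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Stand:
    name: str
    allowed_types: set[str]       # hard constraint: aircraft types the stand can take
    near_vip_lounge: bool = False


@dataclass
class Flight:
    callsign: str
    aircraft_type: str
    on_stand: int                 # minutes after midnight
    off_stand: int
    premium: bool = False         # soft constraint: prefer a stand near the VIP lounge


def allocate(flights: list[Flight], stands: list[Stand]) -> dict[str, Optional[str]]:
    """Greedy sketch: hard constraints filter the candidate stands, soft ones rank them."""
    occupied: dict[str, list[tuple[int, int]]] = {s.name: [] for s in stands}
    plan: dict[str, Optional[str]] = {}
    for f in sorted(flights, key=lambda fl: fl.on_stand):
        feasible = [
            s for s in stands
            if f.aircraft_type in s.allowed_types                        # hard: type fits
            and all(f.off_stand <= start or f.on_stand >= end            # hard: no overlap
                    for start, end in occupied[s.name])
        ]
        if not feasible:
            plan[f.callsign] = None      # no legal stand: leave for a human planner
            continue
        # Soft: False sorts before True, so a premium flight prefers a VIP-lounge stand;
        # the stand name is a deterministic tie-breaker.
        best = min(feasible, key=lambda s: (f.premium and not s.near_vip_lounge, s.name))
        occupied[best.name].append((f.on_stand, f.off_stand))
        plan[f.callsign] = best.name
    return plan


stands = [
    Stand("A1", {"A320", "B738"}),
    Stand("A2", {"A320", "B738"}, near_vip_lounge=True),
    Stand("W1", {"B77W", "A359"}),
]
flights = [
    Flight("XX101", "A320", 360, 420, premium=True),
    Flight("XX102", "B738", 380, 440),
    Flight("XX103", "B77W", 400, 500),
    Flight("XX104", "A320", 400, 450, premium=True),
]
print(allocate(flights, stands))
```

In this example the premium flight XX101 gets the VIP-lounge stand, while XX104 cannot be placed without breaking a hard constraint, so it is left unassigned for a human rather than forced onto an unsuitable stand.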

In summary, rather than being all about Air Traffic Control, this is about all operational stakeholders having the same data, in a digital format, so that more can be achieved with it. It’s convergence rather than siloing, and it enables a digital ecosystem that brings in new entrants, as airports and airlines have already done with other parts of the passenger experience. We can now take that right through the airside areas and out onto the taxiways, where that data can be used to optimize operations. We give access to the 70 percent of information that Air Traffic Control has traditionally gathered as analog data, bearing in mind that, in analog form, it was far less useful than today’s digitized version, whether for presenting it directly or for processing and prediction. From my perspective as an air traffic controller, access to AI-based applications is key to the optimized operation of airfields. We’ve done everything we can in terms of the people in our operations; systems and AI support are the catalyst for making things more efficient and for having a joined-up plan across the entire operation: ATC, airport and airline.
